This page describes highly available rook-ceph backed web content served from stateless nginx pods on kubernetes.

I’ve previously shown how to serve web traffic directly from ceph RGW object gateways, so how is this different? Ceph RGW doesn’t provide a built-in browser like minio does, so you’re stuck providing your own s3-compatible command-line tool or middleware app, and I haven’t found one that I really like. We can’t just stick an nginx deployment on top of a ceph rbd either, because block devices in kubernetes can’t be mounted as a filesystem in ReadWriteMany mode. rbd PVCs must be mounted ReadWriteOnce[1], meaning only one node can mount the volume at a time, and if that node is lost, kubernetes will (without intervention) wait indefinitely for the pod to recover.

[1]: ReadOnlyMany may be supported, though its usefulness is debatable; I haven’t tried it.
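For contrast, an rbd-backed claim in terraform would look roughly like the sketch below. The resource name and size are made up for illustration, and `rook-ceph-block` is the storage class name used in rook’s example manifests, which is assumed here rather than taken from this setup.

```hcl
# Hypothetical rbd-backed claim, shown only for contrast.
# "rook-ceph-block" is assumed from rook's example manifests.
resource "kubernetes_persistent_volume_claim" "rbd_example" {
  metadata {
    name = "rbd-example"
  }

  spec {
    # A filesystem on an rbd image can only be attached to one node at a time
    access_modes = ["ReadWriteOnce"]

    resources {
      requests = {
        storage = "1Gi"
      }
    }

    storage_class_name = "rook-ceph-block"
  }
}
```

With a claim like this, nginx replicas scheduled on different nodes could not share the volume.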

CephFS natively supports ReadWriteMany, and rook provides a cephfs storageclass. This means we can map a cephfs mountpoint into a stateless nginx deployment, and simultaneously write to it with whatever we want. We could mount it into kubernetes cron job pods to update the contents, we could mount it in a simple browser app like filebrowser, we could dump jenkins artifacts to it, or I can simply mount it on my desktop. In addition, we can re-serve it with frontends for other protocols - for example, I have some IPMI devices that want to mount isos over SMB, and network switches that want to load firmware over tftp. Using the approach here, we can create one dumping ground for all firmware/isos/etc, and re-serve it as needed.

Using rook, we need to create the CephFilesystem and the StorageClass.

I can best show the resource manifests by diffing them from the examples in the rook git repo:

    rook/deploy/examples# diff filesystem.yaml filesystem-storageclass-cephfs.yaml
    10c10
    < name: myfs
    ---
    > name: storageclass-cephfs
    21,22c21
    < compression_mode:
    < none
    ---
    > compression_mode: aggressive
    38,39c37
    < compression_mode:
    < none
    ---
    > compression_mode: aggressive
    44c42
    < preserveFilesystemOnDelete: true
    ---
    > preserveFilesystemOnDelete: false

    rook/deploy/examples# git diff csi/cephfs/storageclass.yaml
    diff --git a/deploy/examples/csi/cephfs/storageclass.yaml b/deploy/examples/csi/cephfs/storageclass.yaml
    index c9f599a83..cc3490bc8 100644
    --- a/deploy/examples/csi/cephfs/storageclass.yaml
    +++ b/deploy/examples/csi/cephfs/storageclass.yaml
    @@ -10,11 +10,11 @@ parameters:
      clusterID: rook-ceph # namespace:cluster
      # CephFS filesystem name into which the volume shall be created
    - fsName: myfs
    + fsName: storageclass-cephfs
      # Ceph pool into which the volume shall be created
      # Required for provisionVolume: "true"
    - pool: myfs-replicated
    + pool: storageclass-cephfs-replicated
      # The secrets contain Ceph admin credentials. These are generated automatically by the operator
      # in the same namespace as the cluster.

I pretty much just changed the names, enabled compression, and set preserveFilesystemOnDelete to false.

    kubectl apply -f filesystem-storageclass-cephfs.yaml
    kubectl apply -f csi/cephfs/storageclass.yaml

Now here’s the terraform for the service, deployment, and pvc:

    resource "kubernetes_service" "nginx" {
      wait_for_load_balancer = false

      metadata {
        name = "nginx"
      }

      spec {
        selector = {
          app = "nginx"
        }

        # Expose container port 80 on service port 8123
        port {
          port        = 8123
          target_port = 80
        }

        type         = "LoadBalancer"
        external_ips = ["10.0.100.199"]
      }
    }

    resource "kubernetes_deployment" "nginx" {
      metadata {
        name = "nginx"
      }

      spec {
        replicas = 2

        selector {
          match_labels = {
            app = "nginx"
          }
        }

        template {
          metadata {
            labels = {
              app = "nginx"
            }
          }

          spec {
            container {
              image = "dceoy/nginx-autoindex"
              name  = "nginx"

              # The web frontend only needs to read the content
              volume_mount {
                name       = "data"
                mount_path = "/var/lib/nginx/html"
                read_only  = true
              }
            }

            volume {
              name = "data"

              persistent_volume_claim {
                claim_name = "nginx-data"
              }
            }
          }
        }
      }
    }

    resource "kubernetes_persistent_volume_claim" "nginx-data" {
      metadata {
        name = "nginx-data"
      }

      spec {
        # CephFS lets many pods mount the same volume read-write
        access_modes = ["ReadWriteMany"]

        resources {
          requests = {
            storage = "64Gi"
          }
        }

        storage_class_name = "rook-cephfs"
      }
    }

It’s worth pointing out that even though this is a ReadWriteMany pvc, we’re only mounting it read_only in this http frontend.
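The cron job idea mentioned earlier could be sketched like this: a second workload mounts the same `nginx-data` claim, this time read-write, while the nginx pods keep their read-only view. Everything specific here is an assumption for illustration (the resource name, the `busybox` image, the hourly schedule, and the command that writes a timestamp file), and it assumes a kubernetes provider version that includes `kubernetes_cron_job_v1`.

```hcl
# Hypothetical writer job: name, image, schedule, and command are
# illustrative assumptions, not part of the original setup.
resource "kubernetes_cron_job_v1" "nginx_content_updater" {
  metadata {
    name = "nginx-content-updater"
  }

  spec {
    schedule           = "0 * * * *" # once an hour
    concurrency_policy = "Forbid"    # skip a run if the previous one is still going

    job_template {
      metadata {}

      spec {
        template {
          metadata {}

          spec {
            # Job pods must use OnFailure or Never
            restart_policy = "OnFailure"

            container {
              name    = "updater"
              image   = "busybox"
              command = ["/bin/sh", "-c", "date > /data/last-updated.txt"]

              # No read_only here, so this pod mounts the volume read-write
              volume_mount {
                name       = "data"
                mount_path = "/data"
              }
            }

            volume {
              name = "data"

              persistent_volume_claim {
                claim_name = "nginx-data"
              }
            }
          }
        }
      }
    }
  }
}
```

Because the claim is ReadWriteMany, this pod can run on any node alongside the nginx replicas, and whatever it writes should show up in the autoindex listing.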

I can mount this on a debian desktop with:

    sudo mount -t ceph -o mds_namespace=storageclass-cephfs,name=my_user,secret=my_secret 10.0.200.2:6789,10.0.200.5:6789:/ /mnt

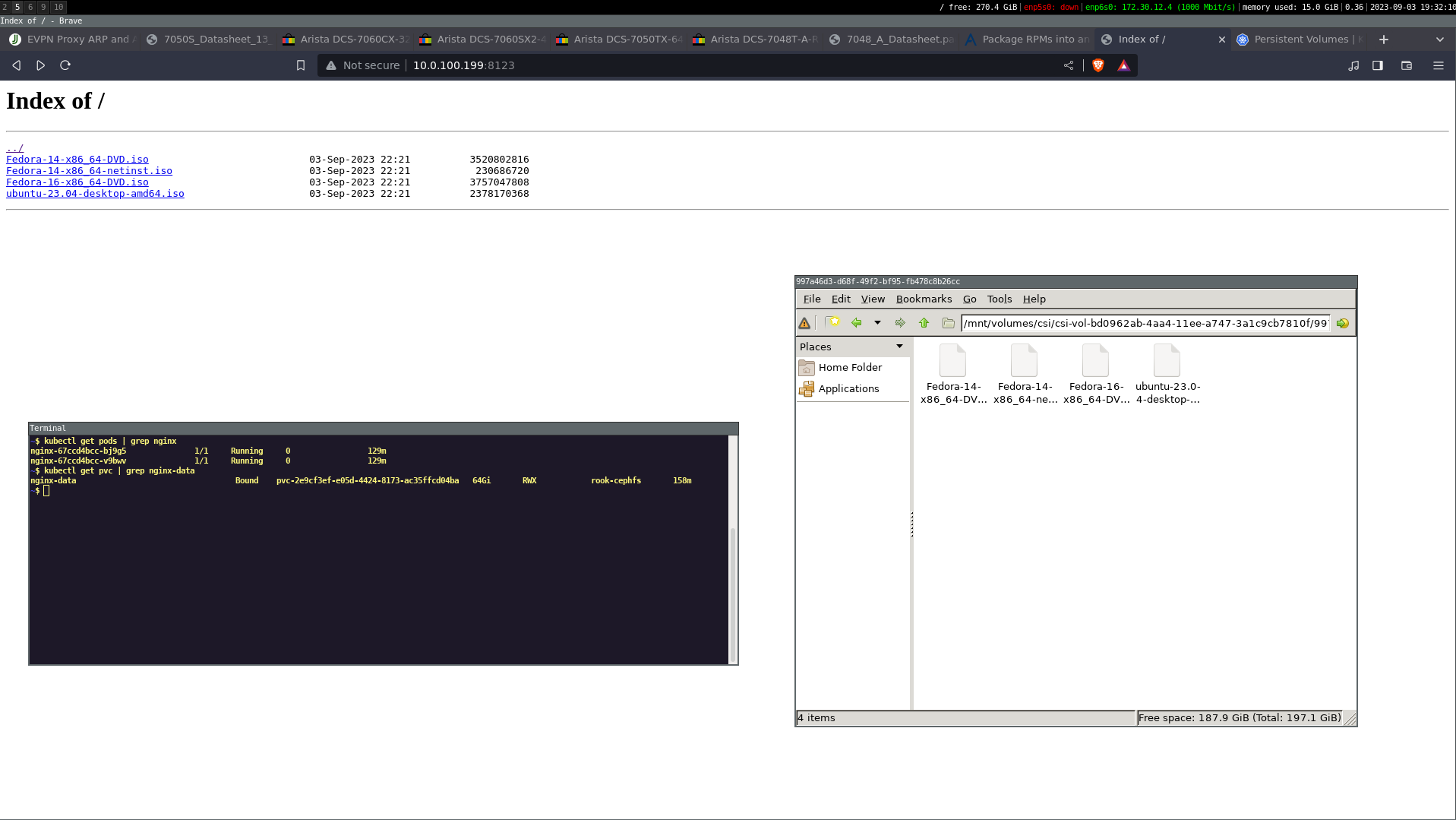

And here’s the money shot:

Now I have a folder on my desktop where I can drop files, and they end up on a browsable web frontend backed by a resilient distributed system.