It’s commonly said that “Linux switching (and routing) is slow”, but I’ve never seen anyone actually measure it against purpose-built networking gear.

I’ll be comparing a Trendnet TEG-S16g Ethernet switch with a Lanner-made Denverton C3558 box that has eight 1000BASE-T ports and dual-channel ECC DDR4 running at 2133 MHz. Frequency scaling is enabled, and the CPUs spend most of their time at 800 MHz.

The Denverton box has no special sysctls set, and everything uses MTU 1500. It is running NixOS, and all relevant ports are in a basic bridge. Everything is running kernel 6.6.68.

To compare these devices, I am running fio across them on a CephFS share. The client is my workstation, and there are three Ceph nodes with one Samsung PM981a NVMe drive each and OSD memory usage capped at 2 GB. CephFS data and metadata are set to replica 2, so after the primary copy of an object is written, the write has to make an additional network hop to reach the secondary replica. All devices are connected with single 1000BASE-T ports.

In fio we’re using --direct=1 --rw=randrw --bs=4k for the absolute worst-case scenario: small synchronous I/O, which is the most sensitive to latency. Of course we can easily get line speed with different parameters, but small sync I/O is what really affects storage-sensitive services.
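To put a rough number on why this workload is bound by latency rather than bandwidth, here is a back-of-the-envelope sketch. It assumes an idealized 1 Gbit/s link with framing and protocol overhead ignored, and the per-op latencies in the loop are illustrative placeholders, not measurements.

```python
# Back-of-the-envelope: a synchronous job at iodepth=1 completes one
# 4 KiB op per round of latency, so latency caps its throughput.
# Assumption: ideal 1 Gbit/s link, framing/protocol overhead ignored.

LINK_BITS_PER_SEC = 1_000_000_000
BLOCK_BYTES = 4096


def wire_time_us(block_bytes: int = BLOCK_BYTES) -> float:
    """Time to serialize one block onto the link, in microseconds."""
    return block_bytes * 8 / LINK_BITS_PER_SEC * 1e6


def sync_throughput_kib_s(latency_ms: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Throughput of one job that waits for each op before issuing the next."""
    ops_per_sec = 1000.0 / latency_ms
    return ops_per_sec * block_bytes / 1024


if __name__ == "__main__":
    print(f"4 KiB on the wire: {wire_time_us():.1f} us")
    for latency_ms in (0.5, 2.0, 15.0):  # illustrative latencies only
        kib_s = sync_throughput_kib_s(latency_ms)
        print(f"{latency_ms:>5} ms/op -> {kib_s:8.1f} KiB/s per job")
```

Putting 4 KiB on a gigabit wire takes on the order of tens of microseconds, while each op waits milliseconds for its completion, so the link itself is nowhere near saturated in this test.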

Here’s ICMP across the Linux switch. 172.30.190.198 is one of the Ceph nodes.

ping -c100 172.30.190.198

--- 172.30.190.198 ping statistics ---

100 packets transmitted, 100 received, 0% packet loss, time 101357ms

rtt min/avg/max/mdev = 0.190/0.249/0.555/0.058 ms

[nix-shell:/nas]$ fio --filename=f --size=4GB --direct=1 --rw=randrw --bs=4k --runtime=600 --numjobs=8 --time_based --group_reporting --name=fio

fio: (g=0): rw=randrw, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.38

Starting 8 processes

fio: Laying out IO file (1 file / 4096MiB)

Jobs: 8 (f=8): [m(8)][100.0%][r=1888KiB/s,w=1952KiB/s][r=472,w=488 IOPS][eta 00m:00s]

fio: (groupid=0, jobs=8): err= 0: pid=1040078: Wed Jan 1 16:17:46 2025

read: IOPS=451, BW=1807KiB/s (1851kB/s)(1059MiB/600011msec)

clat (usec): min=422, max=291844, avg=2270.30, stdev=1882.93

lat (usec): min=422, max=291845, avg=2270.88, stdev=1882.93

clat percentiles (usec):

| 1.00th=[ 594], 5.00th=[ 709], 10.00th=[ 816], 20.00th=[ 1074],

| 30.00th=[ 1500], 40.00th=[ 1926], 50.00th=[ 2278], 60.00th=[ 2507],

| 70.00th=[ 2671], 80.00th=[ 2933], 90.00th=[ 3359], 95.00th=[ 4228],

| 99.00th=[ 6390], 99.50th=[ 7898], 99.90th=[16712], 99.95th=[24249],

| 99.99th=[65799]

bw ( KiB/s): min= 144, max= 3568, per=99.99%, avg=1807.71, stdev=59.71, samples=9592

iops : min= 36, max= 892, avg=451.89, stdev=14.93, samples=9592

write: IOPS=451, BW=1806KiB/s (1849kB/s)(1058MiB/600011msec); 0 zone resets

clat (msec): min=6, max=408, avg=15.44, stdev= 8.00

lat (msec): min=6, max=408, avg=15.44, stdev= 8.00

clat percentiles (msec):

| 1.00th=[ 11], 5.00th=[ 11], 10.00th=[ 12], 20.00th=[ 13],

| 30.00th=[ 14], 40.00th=[ 14], 50.00th=[ 15], 60.00th=[ 16],

| 70.00th=[ 17], 80.00th=[ 18], 90.00th=[ 20], 95.00th=[ 22],

| 99.00th=[ 28], 99.50th=[ 32], 99.90th=[ 142], 99.95th=[ 211],

| 99.99th=[ 296]

bw ( KiB/s): min= 248, max= 2208, per=100.00%, avg=1806.28, stdev=28.30, samples=9592

iops : min= 62, max= 552, avg=451.53, stdev= 7.08, samples=9592

lat (usec) : 500=0.01%, 750=3.35%, 1000=5.50%

lat (msec) : 2=11.99%, 4=26.06%, 10=3.42%, 20=45.41%, 50=4.15%

lat (msec) : 100=0.03%, 250=0.06%, 500=0.01%

cpu : usr=0.15%, sys=0.62%, ctx=594192, majf=0, minf=61

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=271085,270847,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=1807KiB/s (1851kB/s), 1807KiB/s-1807KiB/s (1851kB/s-1851kB/s), io=1059MiB (1110MB), run=600011-600011msec

WRITE: bw=1806KiB/s (1849kB/s), 1806KiB/s-1806KiB/s (1849kB/s-1849kB/s), io=1058MiB (1109MB), run=600011-600011msec

Here’s the Trendnet switch:

ping -c100 172.30.190.198

--- 172.30.190.198 ping statistics ---

100 packets transmitted, 100 received, 0% packet loss, time 101372ms

rtt min/avg/max/mdev = 0.121/0.174/0.597/0.051 ms

[nix-shell:/nas]$ fio --filename=f --size=4GB --direct=1 --rw=randrw --bs=4k --runtime=600 --numjobs=8 --time_based --group_reporting --name=fio

fio: (g=0): rw=randrw, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

...

fio-3.38

Starting 8 processes

fio: Laying out IO file (1 file / 4096MiB)

Jobs: 8 (f=8): [m(8)][100.0%][r=1753KiB/s,w=1733KiB/s][r=438,w=433 IOPS][eta 00m:00s]

fio: (groupid=0, jobs=8): err= 0: pid=1040538: Wed Jan 1 16:42:07 2025

read: IOPS=472, BW=1890KiB/s (1935kB/s)(1107MiB/600009msec)

clat (usec): min=341, max=182487, avg=2120.51, stdev=1491.64

lat (usec): min=341, max=182488, avg=2121.04, stdev=1491.65

clat percentiles (usec):

| 1.00th=[ 490], 5.00th=[ 586], 10.00th=[ 701], 20.00th=[ 955],

| 30.00th=[ 1385], 40.00th=[ 1811], 50.00th=[ 2212], 60.00th=[ 2442],

| 70.00th=[ 2606], 80.00th=[ 2802], 90.00th=[ 3097], 95.00th=[ 3916],

| 99.00th=[ 6063], 99.50th=[ 7242], 99.90th=[15008], 99.95th=[18220],

| 99.99th=[37487]

bw ( KiB/s): min= 296, max= 3656, per=100.00%, avg=1891.01, stdev=57.69, samples=9590

iops : min= 74, max= 914, avg=472.70, stdev=14.42, samples=9590

write: IOPS=472, BW=1889KiB/s (1935kB/s)(1107MiB/600009msec); 0 zone resets

clat (msec): min=7, max=423, avg=14.81, stdev= 5.45

lat (msec): min=7, max=423, avg=14.81, stdev= 5.45

clat percentiles (msec):

| 1.00th=[ 10], 5.00th=[ 11], 10.00th=[ 12], 20.00th=[ 13],

| 30.00th=[ 13], 40.00th=[ 14], 50.00th=[ 15], 60.00th=[ 15],

| 70.00th=[ 16], 80.00th=[ 17], 90.00th=[ 19], 95.00th=[ 21],

| 99.00th=[ 27], 99.50th=[ 29], 99.90th=[ 37], 99.95th=[ 93],

| 99.99th=[ 236]

bw ( KiB/s): min= 256, max= 2288, per=99.99%, avg=1889.69, stdev=20.61, samples=9592

iops : min= 64, max= 572, avg=472.37, stdev= 5.15, samples=9592

lat (usec) : 500=0.67%, 750=5.73%, 1000=4.21%

lat (msec) : 2=11.72%, 4=25.37%, 10=2.90%, 20=46.25%, 50=3.12%

lat (msec) : 100=0.01%, 250=0.02%, 500=0.01%

cpu : usr=0.15%, sys=0.62%, ctx=591143, majf=0, minf=73

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=283504,283382,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=1890KiB/s (1935kB/s), 1890KiB/s-1890KiB/s (1935kB/s-1935kB/s), io=1107MiB (1161MB), run=600009-600009msec

WRITE: bw=1889KiB/s (1935kB/s), 1889KiB/s-1889KiB/s (1935kB/s-1935kB/s), io=1107MiB (1161MB), run=600009-600009msec

Average ICMP round-trip time going from 0.249 ms to 0.174 ms is 30% faster.

Interestingly, IOPS going from 451 to 472 is only about 4.7% faster (21 more IOPS on a base of 451). Average write latency went down 0.63 ms, from 15.44 ms to 14.81 ms.
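These percentages can be reproduced from the averages in the ping and fio summaries above. A minimal sketch, using the rounded values as printed; the dictionary keys are just labels chosen for this example.

```python
# Relative changes computed from the reported averages of the two runs.

def pct_change(old: float, new: float) -> float:
    """Signed percentage change going from old to new."""
    return (new - old) / old * 100.0


linux_bridge = {"ping_avg_ms": 0.249, "iops": 451, "write_lat_ms": 15.44}
trendnet = {"ping_avg_ms": 0.174, "iops": 472, "write_lat_ms": 14.81}

if __name__ == "__main__":
    for key in linux_bridge:
        change = pct_change(linux_bridge[key], trendnet[key])
        print(f"{key}: {change:+.1f}%")
```

The baseline matters for small differences: `pct_change(451, 472)` measures the gain from the Linux bridge's side, while `pct_change(472, 451)` measures the same 21 IOPS gap from the Trendnet side and comes out slightly smaller in magnitude.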

Why is there only a marginal improvement in iops?

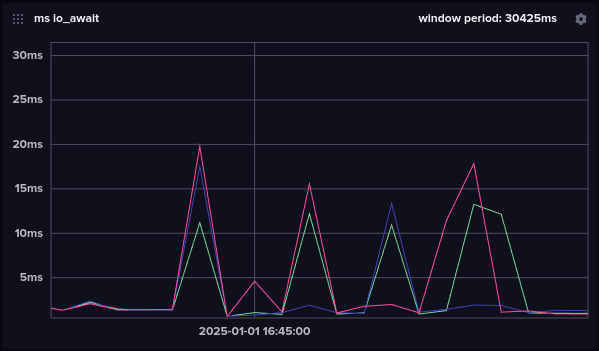

This is the io_await of the OSD disks, which Telegraf defines as “The average time per I/O operation”.

The 0.075 ms improvement in network latency is small compared to these latency spikes of 10 to 20 ms.
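A rough way to check this against the fio numbers: with iodepth=1, each of the 8 jobs has exactly one op in flight, so total IOPS should be close to the number of jobs divided by the mean per-op latency (Little's law). The sketch below assumes reads and writes are split 50/50, which is fio's default mix for randrw, and uses the rounded average latencies from the two runs above.

```python
# Sanity check: IOPS ~= numjobs / mean per-op latency at iodepth=1.
# Assumption: 50/50 read/write mix (fio's default for --rw=randrw).

NUMJOBS = 8
RTT_DELTA_MS = 0.249 - 0.174  # difference in average ping RTT


def predicted_iops(read_lat_ms: float, write_lat_ms: float,
                   numjobs: int = NUMJOBS) -> float:
    """Total read+write IOPS implied by the average latencies."""
    mean_lat_ms = (read_lat_ms + write_lat_ms) / 2
    return numjobs * 1000.0 / mean_lat_ms


runs = {
    "linux bridge": {"read_ms": 2.27, "write_ms": 15.44, "measured": 451 + 451},
    "trendnet": {"read_ms": 2.12, "write_ms": 14.81, "measured": 472 + 472},
}

if __name__ == "__main__":
    for name, run in runs.items():
        mean_ms = (run["read_ms"] + run["write_ms"]) / 2
        est = predicted_iops(run["read_ms"], run["write_ms"])
        print(f"{name}: mean op latency {mean_ms:.2f} ms, "
              f"predicted {est:.0f} IOPS, measured {run['measured']}")
        print(f"  one RTT delta is {RTT_DELTA_MS / mean_ms:.1%} of a mean op")
```

The predicted totals land within a couple of IOPS of what fio reported, which suggests throughput here is set almost entirely by per-op latency. A single 0.075 ms round-trip saving is under 1% of an average op, so a 30% faster ping can only move IOPS by a few percent.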

Why are they not consistent? My guess is that this is the firmware shuffling bits around, causing everything to slow down for a bit. If you look at SSD reviews at a place like storagereview.com, you’ll see it’s very common for write performance to do all kinds of strange things like this under sustained pressure.

While a 30% improvement in the network sounds like it would have a bigger impact, if we really want to improve the performance of latency-sensitive clustered services like Ceph storage (or really any persistent database), the latency of the devices at the very end of the datapath is likely more important, up to a point. It would be interesting to now compare all this with DRAM-less drives or enterprise U.2 drives.